If you are familiar with REST APIs, you already know about the HTTP calls used to get and post data between the client and the server. What if you wish to create a REST API client in PHP? The usual answer is cURL. cURL is the most widely used way to make HTTP calls in PHP, but it involves several complicated steps.

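Let’s see a simple cURL request in PHP:

$url = "https://api.cloudways.com/api/v1";
// Keep the handle in $ch so the curl_setopt() calls below refer to it
$ch = curl_init($url);
// Each header is its own array element
curl_setopt($ch, CURLOPT_HTTPHEADER, ['Accept: application/json', 'Content-Type: application/json']);
curl_setopt($ch, CURLOPT_CUSTOMREQUEST, 'GET');
// Return the response as a string instead of printing it, then send the request
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
$response = curl_exec($ch);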

You need to call the curl_setopt() function to define the headers and the particular HTTP verb, such as GET, POST or PUT. It does look pretty complicated. So, what is a better and more robust alternative?

Here comes Guzzle.

Let’s see how Guzzle creates a request:

$client = new GuzzleHttp\Client();
$res = $client->request('GET', 'https://api.cloudways.com/api/v1', [
    'headers' => [
        'Accept' => 'application/json',
        'Content-Type' => 'application/json',
    ],
]);

You can see that it is simple. You just need to initialize the Guzzle client and give it an HTTP verb and a URL. After that, pass the array of headers and other options.

Understand the Guzzle Client

Guzzle is a simple PHP HTTP client that provides an easy way to make HTTP calls and integrate with web services. It acts as an abstraction layer that your application uses to send HTTP messages to a server. Several prominent features of Guzzle are:

- It can send both synchronous and asynchronous requests.
- It provides a simple interface for building query strings, POST requests, streaming large uploads and downloads, uploading JSON data, etc.
- It allows the use of other PSR-7 compatible libraries with Guzzle.
- It allows you to write environment- and transport-agnostic code.
- Its middleware system allows you to augment and compose client behavior.
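As a rough illustration of the first two features, the sketch below sends one synchronous and one asynchronous request. So that it runs without network access, it swaps in Guzzle’s MockHandler with canned responses; the example.com URL and the response bodies are placeholders and do not belong to any real API.

```php
<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Handler\MockHandler;
use GuzzleHttp\HandlerStack;
use GuzzleHttp\Psr7\Response;

// Queue two canned responses so no real HTTP traffic is needed (placeholder data).
$mock = new MockHandler([
    new Response(200, ['Content-Type' => 'application/json'], '{"source":"sync"}'),
    new Response(200, ['Content-Type' => 'application/json'], '{"source":"async"}'),
]);
$client = new Client(['handler' => HandlerStack::create($mock)]);

// Synchronous: request() blocks until the response is available.
$syncResponse = $client->request('GET', 'https://example.com/items', [
    'query' => ['page' => 1], // Guzzle builds the query string from this array
]);
$syncData = json_decode((string) $syncResponse->getBody(), true);

// Asynchronous: requestAsync() returns a promise; wait() resolves it.
$promise = $client->requestAsync('GET', 'https://example.com/items');
$asyncResponse = $promise->wait();
$asyncData = json_decode((string) $asyncResponse->getBody(), true);

echo $syncData['source'], PHP_EOL;
echo $asyncData['source'], PHP_EOL;
```

In real code you would drop the handler option so that Guzzle picks its default transport.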

Install Guzzle In PHP

The preferred way of installing Guzzle is Composer. If you haven’t installed Composer yet, download it from the official Composer website (getcomposer.org).

Now, to install Guzzle, run the following command in your SSH terminal:

composer require guzzlehttp/guzzle

This command will install the latest version of Guzzle in your PHP project. Alternatively, you can define it as a dependency in the composer.json file by adding the following code to it.

{
    "require": {
        "guzzlehttp/guzzle": "~6.0"
    }
}

After that, run the composer install command. Finally, require the autoloader and import the classes you need to use Guzzle:

require 'vendor/autoload.php';
use GuzzleHttp\Client;
use GuzzleHttp\Exception\RequestException;
use GuzzleHttp\Psr7\Request;

The installation process is over, and now it’s time to work with a real example of creating HTTP calls with an API. For the purpose of this article, I will work with the Cloudways API.

What You Can Do With the Cloudways API

Cloudways is a managed hosting provider for PHP, Magento, WordPress and many other frameworks and CMSs. It has an API that you can use for performing CRUD operations on servers and applications. Check out popular use cases of the Cloudways API to see how you could integrate it into your projects.

In this article, I am going to create HTTP calls with Guzzle to perform specific operations on a Cloudways server.

Create the HTTP Requests In Guzzle

As I mentioned earlier, creating HTTP requests in Guzzle is very easy; you only need to pass the base URI, HTTP verb and headers. If the external API has an authentication layer, you can pass its parameters through Guzzle as well. The Cloudways API, for instance, needs an email and an API key to authenticate users before it sends a response. You need to sign up for a Cloudways account to get your API credentials.

Let’s start by creating a CloudwaysAPIClient.php file to set up Guzzle for making HTTP calls. I will also create a class with several methods that make HTTP calls.

The URL of the API does not change, so I will use a class constant for it. Later on, I will concatenate it with other URL suffixes to get the response. Additionally, I have declared the properties $auth_key and $auth_email, which hold the API key and the authentication email. $accessToken holds the temporary token, which is renewed every time I access the API.

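class CloudwaysAPIClient
{
    private $client = null;
    const API_URL = "https://api.cloudways.com/api/v1";
    public $auth_key;
    public $auth_email;
    public $accessToken;

    public function __construct($email, $key)
    {
        $this->auth_email = $email;
        $this->auth_key = $key;
        $this->client = new Client();
        // Fetch a token up front so that later calls can send it in the Authorization header
        $this->prepare_access_token();
    }

    // The methods shown in the following sections go inside this class
}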
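Instead of concatenating the base URL by hand, Guzzle also accepts a base_uri client option and resolves relative paths against it. The sketch below is an alternative to the constant, not what the rest of this article uses. It assumes a version of guzzlehttp/psr7 that ships UriResolver, and uses it to show how the resolution behaves. Note the trailing slash on the base URI and the missing leading slash on the path.

```php
<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Psr7\Uri;
use GuzzleHttp\Psr7\UriResolver;

// With base_uri set, request paths can be relative.
$client = new Client(['base_uri' => 'https://api.cloudways.com/api/v1/']);
// $client->get('server') would then target https://api.cloudways.com/api/v1/server

// Relative references are resolved following RFC 3986.
$base = new Uri('https://api.cloudways.com/api/v1/');
$relative = (string) UriResolver::resolve($base, new Uri('server'));
$absolute = (string) UriResolver::resolve($base, new Uri('/server'));

echo $relative, PHP_EOL; // https://api.cloudways.com/api/v1/server
echo $absolute, PHP_EOL; // https://api.cloudways.com/server (leading slash drops /api/v1)
```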

Create a POST Request to Get the Access Token

The access token is generated from this URL: https://api.cloudways.com/api/v1/oauth/access_token every time I access the API. The URL is stored in $url, alongside a $data array that holds the auth credentials. I then send a POST request with that URL and the credentials as a query string. The response is decoded and the access token is saved so that it can be used within the other methods.

public function prepare_access_token()
{
    try
    {
        $url = self::API_URL . "/oauth/access_token";
        $data = ['email' => $this->auth_email, 'api_key' => $this->auth_key];
        $response = $this->client->post($url, ['query' => $data]);
        $result = json_decode($response->getBody()->getContents());
        $this->accessToken = $result->access_token;
    }
    catch (RequestException $e)
    {
        $response = $this->StatusCodeHandling($e);
        return $response;
    }
}
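The method above assumes that the decoded body always contains an access_token field. As a minimal sketch of a more defensive variant, the hypothetical helper below (extractAccessToken is not part of Guzzle or the Cloudways API) throws when the field is missing. The JSON strings are placeholders; a real response may carry more fields.

```php
<?php
// Hypothetical helper: pull the access token out of a JSON response body.
function extractAccessToken(string $json): string
{
    $result = json_decode($json);
    if (!is_object($result) || empty($result->access_token)) {
        throw new UnexpectedValueException('Response does not contain an access_token field.');
    }
    return (string) $result->access_token;
}

// Placeholder body that contains the field.
$token = extractAccessToken('{"access_token":"abc123"}');
echo $token, PHP_EOL;

// Placeholder body without the field: the helper throws instead of storing null.
$failure = null;
try {
    extractAccessToken('{"error":"invalid credentials"}');
} catch (UnexpectedValueException $e) {
    $failure = $e->getMessage();
}
echo $failure, PHP_EOL;
```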

The POST request for getting the access token is now complete. You may have noticed that the exception handler calls a method named StatusCodeHandling($e). It takes care of error response codes (HTTP codes such as 400, 401, 403, 422 and 500): on a 400 it requests a fresh access token, and for every other code it returns the decoded error body.

public function StatusCodeHandling($e)
{
    // Connection-level failures may carry no response, so there is nothing to decode
    if (!$e->hasResponse())
    {
        return null;
    }

    $status = $e->getResponse()->getStatusCode();

    if ($status == 400)
    {
        // Request a fresh access token; nothing is returned for this case
        $this->prepare_access_token();
        return null;
    }

    // 401, 403, 422, 500 and every other error code: return the decoded error body
    return json_decode($e->getResponse()->getBody()->getContents());
}
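To see this kind of handling in action without calling a real API, the sketch below queues a fake 422 response through Guzzle’s MockHandler and catches the resulting RequestException. The URL and the error body are placeholders; only the Guzzle classes and methods are real.

```php
<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Exception\RequestException;
use GuzzleHttp\Handler\MockHandler;
use GuzzleHttp\HandlerStack;
use GuzzleHttp\Psr7\Response;

// Queue a canned 422 response (placeholder body).
$mock = new MockHandler([
    new Response(422, ['Content-Type' => 'application/json'], '{"message":"Validation failed"}'),
]);
$client = new Client(['handler' => HandlerStack::create($mock)]);

$status = null;
$error = null;
try {
    // By default Guzzle throws for 4xx and 5xx responses.
    $client->request('PUT', 'https://example.com/server/1');
} catch (RequestException $e) {
    // getResponse() can be null for connection-level failures, so check first.
    if ($e->hasResponse()) {
        $status = $e->getResponse()->getStatusCode();
        $error = json_decode((string) $e->getResponse()->getBody());
    }
}

echo $status, ' ', $error->message, PHP_EOL;
```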

The main client class is now complete. I will extend it to create more HTTP requests for different cases.

Create a GET Request to Fetch All Servers

Once the user is authenticated, I can fetch all my servers and applications from Cloudways. /server is the suffix concatenated with the base URI. This time, I will attach the access token as a Bearer Authorization header in Guzzle to fetch all servers as a JSON response. To do this, create a new method:

public function get_servers()
{
    try
    {
        $url = self::API_URL . "/server";
        $header = array('Authorization' => 'Bearer ' . $this->accessToken);
        $response = $this->client->get($url, array('headers' => $header));
        // Decode the JSON so that callers can read $result->servers directly
        $result = json_decode($response->getBody()->getContents());
        return $result;
    }
    catch (RequestException $e)
    {
        $response = $this->StatusCodeHandling($e);
        return $response;
    }
}
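If you would rather check status codes yourself than catch exceptions, Guzzle 6 and later offer the http_errors request option. The sketch below shows the idea against a mocked 404 response; the URL and body are placeholders, and this is an alternative style, not the approach used by the class in this article.

```php
<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Handler\MockHandler;
use GuzzleHttp\HandlerStack;
use GuzzleHttp\Psr7\Response;

// Queue a canned 404 response (placeholder body).
$mock = new MockHandler([
    new Response(404, ['Content-Type' => 'application/json'], '{"message":"Not found"}'),
]);
$client = new Client(['handler' => HandlerStack::create($mock)]);

// With http_errors disabled, 4xx and 5xx responses are returned instead of thrown.
$response = $client->request('GET', 'https://example.com/server', [
    'http_errors' => false,
]);

$status = $response->getStatusCode();
$body = json_decode((string) $response->getBody());

if ($status >= 400) {
    echo 'Request failed with status ', $status, ': ', $body->message, PHP_EOL;
}
```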

Now create an index.php file and include CloudwaysAPIClient.php at the top. Next, I will declare my API key and email, and pass them to the class constructor to finally get the servers.

include 'CloudwaysAPIClient.php';
$api_key = 'W9bqKxxxxxxxxxxxxxxxxxxxjEfY0';
$email = 'shahroze.nawaz@cloudways.com';
$cw_api = new CloudwaysAPIClient($email, $api_key);
$servers = $cw_api->get_servers();
echo '<pre>';
var_dump($servers);
echo '</pre>';

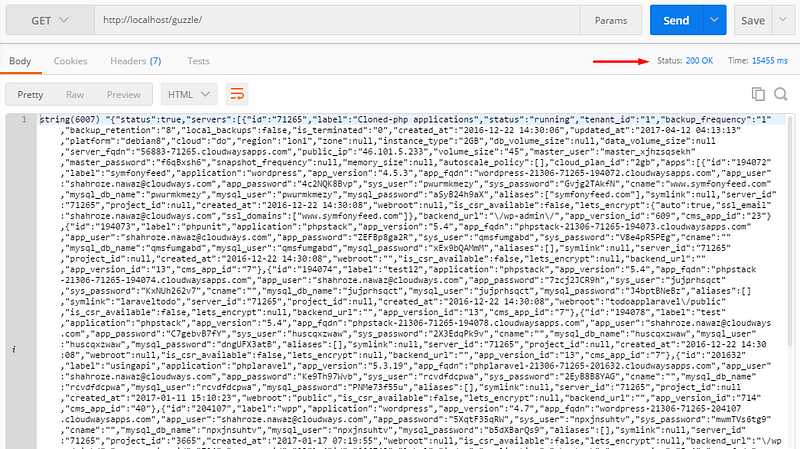

Let’s test it in Postman to verify that the right information and response codes are being returned.

So all my servers hosted on the Cloudways Platform are being fetched. Similarly, you can create new methods with HTTP calls to get applications, server settings, services, etc.

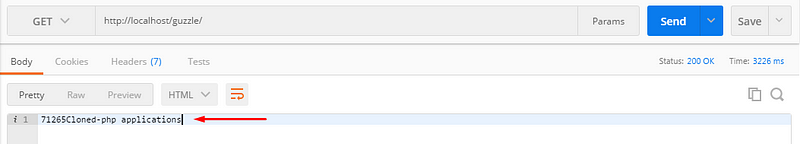

Let’s create a PUT call to change the label of the server, which is Cloned-php applications at the moment. But first, I need to get the server ID and label, because this information will be passed as arguments. To get them, create a foreach loop in the index.php file:

foreach ($servers->servers as $server) {
    echo $server->id;
    echo $server->label;
}

Now, if I hit the API, it will fetch the server id and label.
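If you only care about one server, you can wrap the lookup in a small function. The sketch below works on a placeholder payload shaped like the servers list used above (a servers array whose items have id and label); the second server and the findServerByLabel helper are invented for illustration.

```php
<?php
// Placeholder payload; the second entry is made up for the example.
$json = '{"servers":[{"id":"71265","label":"Cloned-php applications"},{"id":"80001","label":"Staging"}]}';
$servers = json_decode($json);

// Hypothetical helper: return the first server with the given label, or null.
function findServerByLabel(array $servers, string $label): ?object
{
    foreach ($servers as $server) {
        if ($server->label === $label) {
            return $server;
        }
    }
    return null;
}

$match = findServerByLabel($servers->servers, 'Cloned-php applications');
$missing = findServerByLabel($servers->servers, 'Does not exist');

echo $match ? $match->id : 'not found', PHP_EOL;
echo $missing ? $missing->id : 'not found', PHP_EOL;
```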

Create a PUT Request to Change Server Label

Now, to change the server label, I need to create a PUT call in Guzzle. I will extend the class with a new method. Remember that the server ID and label are two necessary parameters that will be passed to the method.

public function changelabel($serverid, $label)
{
    try
    {
        $url = self::API_URL . "/server/$serverid";
        $data = ['server_id' => $serverid, 'label' => $label];
        $header = array('Authorization' => 'Bearer ' . $this->accessToken);
        $response = $this->client->put($url, array('query' => $data, 'headers' => $header));
        return json_decode($response->getBody()->getContents());
    }
    catch (RequestException $e)
    {
        $response = $this->StatusCodeHandling($e);
        return $response;
    }
}
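The method above sends its data through the query option, which puts it in the URL. Guzzle also has a json option that encodes the data into the request body instead. Which one an API expects depends on the API; the sketch below only shows how the two options shape the outgoing request, using a mocked handler and Guzzle’s history middleware with a placeholder URL.

```php
<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Handler\MockHandler;
use GuzzleHttp\HandlerStack;
use GuzzleHttp\Middleware;
use GuzzleHttp\Psr7\Response;

// Record outgoing requests so they can be inspected afterwards.
$history = [];
$stack = HandlerStack::create(new MockHandler([new Response(200), new Response(200)]));
$stack->push(Middleware::history($history));
$client = new Client(['handler' => $stack]);

$data = ['label' => 'Cloudways Server'];

// 'query' appends the data to the URL as a query string.
$client->put('https://example.com/server/71265', ['query' => $data]);

// 'json' encodes the data into the body and sets the Content-Type header.
$client->put('https://example.com/server/71265', ['json' => $data]);

parse_str($history[0]['request']->getUri()->getQuery(), $sentQuery);
$sentBody = (string) $history[1]['request']->getBody();
$sentType = $history[1]['request']->getHeaderLine('Content-Type');

echo $sentQuery['label'], PHP_EOL;
echo $sentBody, PHP_EOL;
echo $sentType, PHP_EOL;
```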

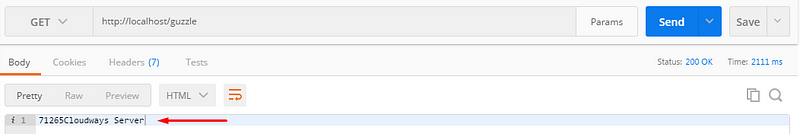

Now, in index.php, put this condition inside the foreach loop so that it is checked for every server.

if ($server->id == '71265' && $server->label == 'Cloned-php applications') {
    $label = 'Cloudways Server';
    $changelabel = $cw_api->changelabel($server->id, $label);
}

When testing this in Postman, I will get the updated server label.

Create a DELETE Request to Remove a Server

To delete a server using the Cloudways API, I need to create a DELETE request in Guzzle through the following method. It is very similar to the method above, because it also takes two parameters: the server ID and label.

public function deleteServer($serverid, $label)
{
    try
    {
        $url = self::API_URL . "/server/$serverid";
        $data = ['server_id' => $serverid, 'label' => $label];
        $header = array('Authorization' => 'Bearer ' . $this->accessToken);
        $response = $this->client->delete($url, array('query' => $data, 'headers' => $header));
        return json_decode($response->getBody()->getContents());
    }
    catch (RequestException $e)
    {
        $response = $this->StatusCodeHandling($e);
        return $response;
    }
}

Try this in Postman or just refresh the page. The server will be deleted.

Final Words

Guzzle is a flexible HTTP client that you can extend to fit your requirements. You can also try out new ideas with uploading data, form fields, cookies, redirects and exceptions. You can also create middleware for an authentication layer (if needed). All in all, Guzzle is a great option for creating REST API clients in PHP without using any frameworks.
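As a rough idea of what such authentication middleware could look like, the sketch below uses Guzzle’s Middleware::mapRequest to add a Bearer header to every outgoing request, so individual methods no longer need to build it. The token value and URL are placeholders, and the mocked handler and history middleware are there only so the result can be inspected offline.

```php
<?php
require 'vendor/autoload.php';

use GuzzleHttp\Client;
use GuzzleHttp\Handler\MockHandler;
use GuzzleHttp\HandlerStack;
use GuzzleHttp\Middleware;
use GuzzleHttp\Psr7\Response;
use Psr\Http\Message\RequestInterface;

$accessToken = 'example-token'; // placeholder; a real token would come from the API

$history = [];
$stack = HandlerStack::create(new MockHandler([new Response(200)]));

// Add the Authorization header to every request that passes through the stack.
$stack->push(Middleware::mapRequest(function (RequestInterface $request) use ($accessToken) {
    return $request->withHeader('Authorization', 'Bearer ' . $accessToken);
}));

// Pushed afterwards, so it records the request with the header already applied.
$stack->push(Middleware::history($history));

$client = new Client(['handler' => $stack]);
$client->get('https://example.com/server');

$sentHeader = $history[0]['request']->getHeaderLine('Authorization');
echo $sentHeader, PHP_EOL;
```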

If you have any questions or queries, leave a comment below.